It's another terminology post! Earlier this week I was having a delightful lunch with Angela Henry, Kevin Feasel, Javier Guillen, and Jason Thomas. We were chatting about various new things. Partway through our conversation Jason stopped me because he thought I was talking about Power BI Dataflows when I was really talking about Azure Data Factory Data Flows. It was kind of a funny moment, actually, but it did illustrate that we have some overlapping terminology coming into our world.

So, with that inspiration, let's have a chat about some of the new data flow capabilities in the Microsoft world, shall we?

Azure Data Factory Data Flow

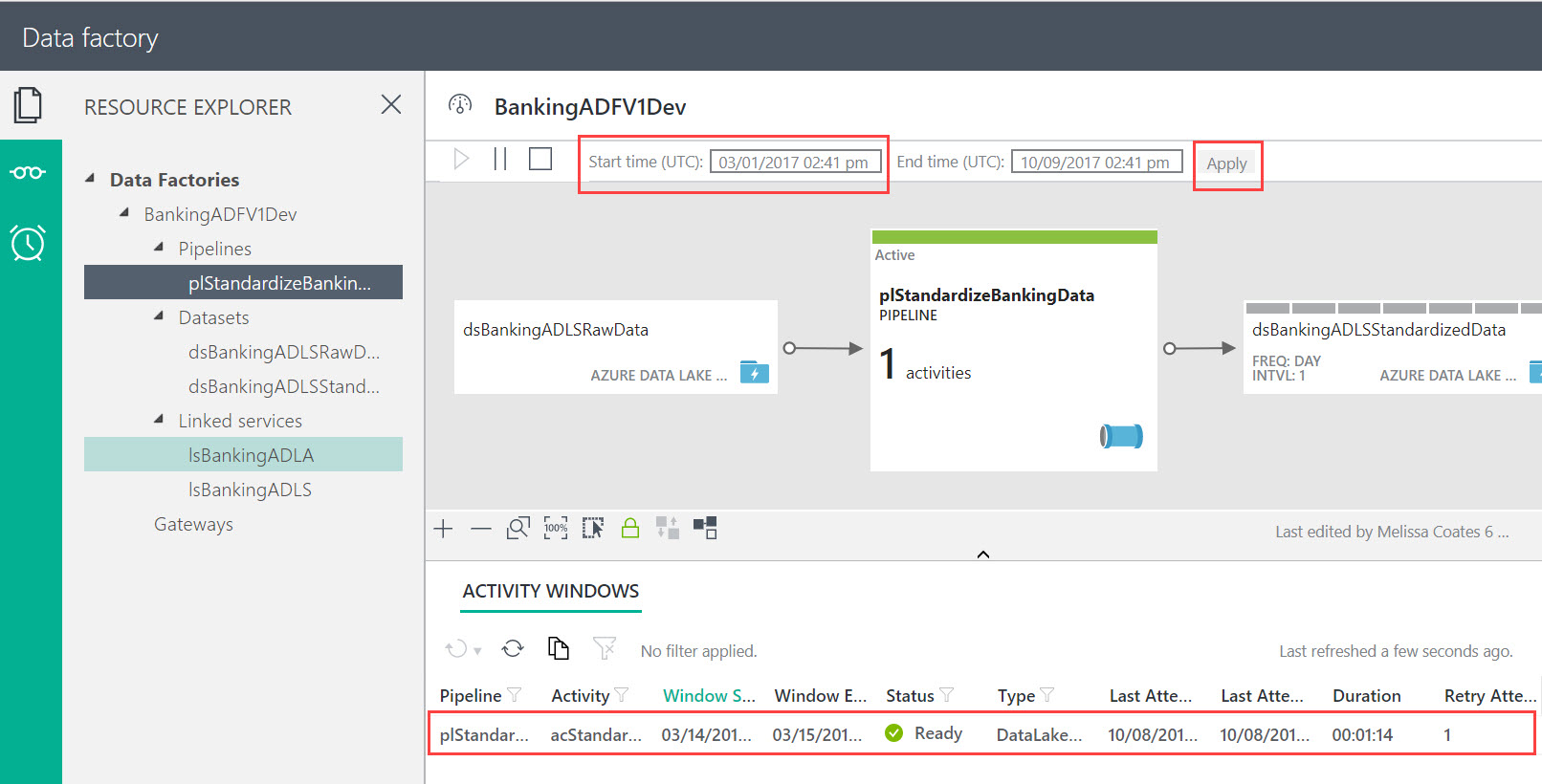

The new Azure Data Factory (ADF) Data Flow capability is analogous to the data flow in SSIS: it allows you to build data transformation logic using a graphical interface. A really interesting aspect of ADF Data Flows is that they use Azure Databricks as the runtime engine underneath -- however, you don't actually have to know Spark or Databricks in order to use ADF Data Flows. The goal is for it to be a low code/no code way to transform data at scale.

Follow Mark Kromer and the ADF team on Twitter to stay up to date on the rollout of the preview.

More info on ADF Data Flow can be found here: https://aka.ms/adfdataflowdocs.

Power BI Dataflows

Power BI Dataflows (yes, this one is branded as one word) are a new type of object in a Power BI Workspace which will allow you to load data into a Common Data Model. Data is loaded via a web-based version of Power Query, which is why this capability is referred to as self-service data prep. The resulting data is stored in Azure Data Lake Storage Gen2. Once in the Common Data Model in the data lake, it can be reused among various Power BI datasets -- allowing the data load, transformations, and cleansing to be done once rather than repeated in numerous PBIX files. This capability was known for a little while during the private preview as Power BI Datapools or as 'Common Data Service for Analytics' (CDS-A) -- but the final name looks like it's going to be Power BI Dataflows.

It's still early so there's not a lot of info available online yet. James Serra wrote up a nice summary and has a few links on his blog. Also, here's a diagram that Chris and I included in the recently updated whitepaper Planning a Power BI Enterprise Deployment which shows our initial understanding of the Power BI Dataflows capability:

Note that Pro users can use Power BI Dataflows without requiring Premium. However, my hunch is that this capability will be most appealing for data at scale - i.e., the features that Premium offers with respect to Power BI Dataflows will be pretty compelling, which is why Premium is depicted in the diagram above.

SSIS Data Flow

Data flows have long been a key part of SQL Server Integration Services (SSIS) for data transformations, just like the new capability being added to ADF discussed above. With Azure Data Factory V2, we can also host and execute SSIS packages in Azure.

Microsoft Flow

Just for completeness I'll cover one more product which is similarly named. Flow is an Office 365 service for workflow automation between services. It can be used in conjunction with PowerApps and Power BI for different types of workflow automation. Flow lets you do things like approval requests, sending an e-mail alert, or creating a task in a project management system.

Now you know there are multiple types of data flows being launched into the world of Microsoft BI (in addition to the good old SSIS data flows we've had forever). Now you can cleverly watch out for which one is being bantered about in your techie conversations.
