This is part 3 of a discussion about a new service in Microsoft Azure: Azure Analysis Services. Azure AS is a Platform-as-a-Service (PaaS) offering which is in public preview mode (as of December 2016).

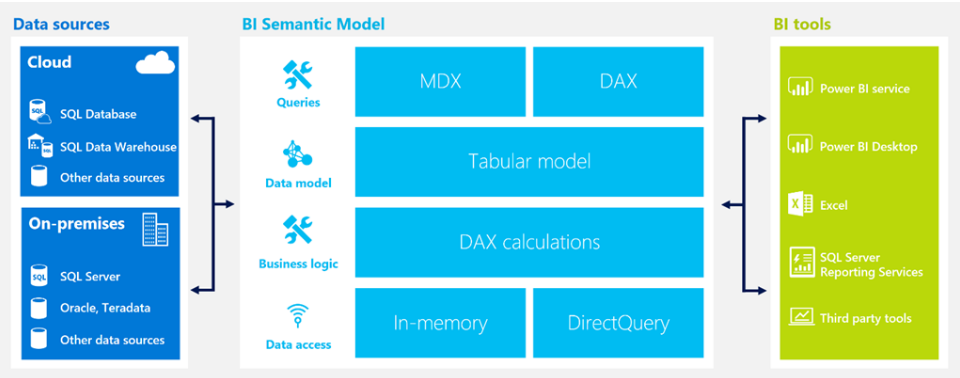

Part 1: Why a Semantic Layer Like Azure Analysis Services is Relevant

Part 2: Use Cases for Azure Analysis Services

Part 3: Where Azure Analysis Services Fits Into BI & Analytics Architecture {you are here}

From an infrastructure perspective, there are a variety of ways you can use Azure Analysis Services. Basically, you can think of it as a "mix & match" of the following 3 options:

(1) Location of Assets

- Hybrid. This is most common for companies which are extending their existing infrastructure.

- All cloud-based. Utilization of cloud infrastructure, such as Azure services, is most common for brand new companies.

- All on-premises. N/A for Azure AS - you'll want to use SQL Server Analysis Services instead of Azure Analysis Services for a fully on-premises implementation.

(2) Data Sources

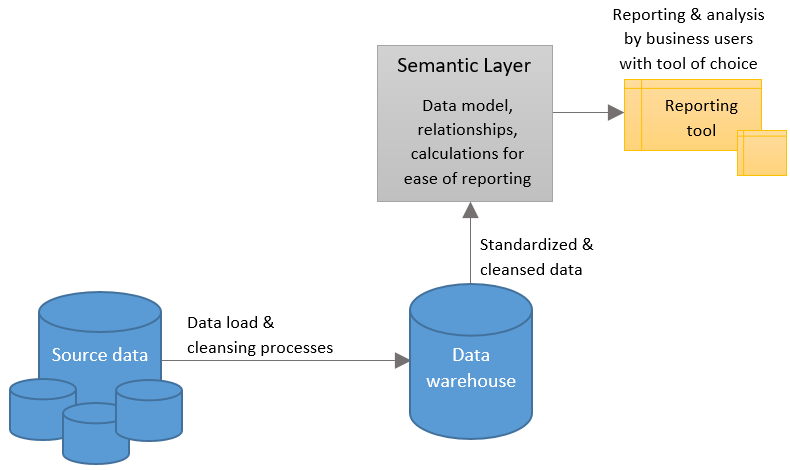

- From a single source such as a data warehouse. This is the most traditional path for BI development, and still has a very valid place in many BI/analytics deployments. This scenario puts the work of data integration on the ETL process that loads the data warehouse, which is the most appropriate place for it.

- Directly from various systems. This can be done, but works well only in specific cases - it definitely won't work well if there are a lot of highly normalized tables, or if there's not a straightforward way to relate the disparate data together. Going directly to the source systems & skipping an intermediary data warehouse puts the "integration" burden on the data source view in Analysis Services, so plan for plenty of testing time if you're going to try this route (it can be much harder, not easier). Note that this option only makes sense if the data is stored in Analysis Services, because the data needs to be related together somehow. DirectQuery mode (discussed next in #3) with more than one data source won't work if a user tries to combine data sources, because the data is not inherently related.

There is one twist to the data source options, and that is the use of federated queries. See the last example at the end of this post for more on that.

(3) Data Storage

- Stored (cached) in the AS data model. Data is stored in the in-memory model. In this case, we're using Analysis Services for its in-memory database as well as a semantic layer. This requires a scheduled refresh and provides the best performance.

- DirectQuery. Data is *not* stored in Analysis Services; rather, AS is basically a semantic layer only wherein all queries are actually sent to underlying data source(s). This is useful when near real-time data is desired. However, unless your source system is tuned for it, performance may not be acceptable.
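To make the "mix & match" idea a bit more concrete, here is a minimal sketch in Python that records one choice from each of the three lists and returns the concerns raised above for that combination. The names (`Deployment`, `Location`, `Storage`, `review`) are hypothetical and exist only for illustration; nothing here is an Azure API.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Location(Enum):
    HYBRID = "hybrid"
    ALL_CLOUD = "all cloud-based"
    ALL_ON_PREMISES = "all on-premises"


class Storage(Enum):
    CACHED = "stored in the AS model"
    DIRECT_QUERY = "DirectQuery"


@dataclass
class Deployment:
    location: Location
    source_count: int  # 1 = a single source such as a data warehouse
    storage: Storage


def review(d: Deployment) -> list[str]:
    """Return the concerns discussed in this post for one combination."""
    concerns = []
    if d.location is Location.ALL_ON_PREMISES:
        concerns.append("Use SQL Server Analysis Services instead of Azure AS.")
    if d.source_count > 1 and d.storage is Storage.DIRECT_QUERY:
        concerns.append("DirectQuery cannot relate data across multiple sources.")
    if d.source_count > 1 and d.storage is Storage.CACHED:
        concerns.append("Integration burden moves into the model; plan extra testing.")
    if d.storage is Storage.DIRECT_QUERY:
        concerns.append("Performance depends on how well the source is tuned.")
    return concerns


if __name__ == "__main__":
    candidate = Deployment(Location.ALL_CLOUD, source_count=2, storage=Storage.DIRECT_QUERY)
    for concern in review(candidate):
        print("-", concern)
```

A hybrid deployment with a single data warehouse and cached storage comes back with no concerns, which matches the most traditional path described above.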

Note that the property to specify if the AS model is DirectQuery or not is associated with the .bim file. This means that the entire model is either DirectQuery or it's not (you can't choose on a data source-by-data source basis or a table-by-table basis).
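If you want to check which mode a model is in without opening Visual Studio, a rough sketch like the following can help. It assumes the .bim file is the JSON format used by tabular models at compatibility level 1200, and that the mode is recorded as a model-level `defaultMode` property; both are assumptions you should verify against your own file. The sample JSON and the `SalesModel` name are made up for illustration.

```python
import json


def is_direct_query(bim_text: str) -> bool:
    """Report whether a JSON .bim document is marked DirectQuery at the model level.

    Assumption: the mode lives in model.defaultMode, and a missing value
    means the data is imported (stored in the model).
    """
    model = json.loads(bim_text).get("model", {})
    return str(model.get("defaultMode", "import")).lower() == "directquery"


# Hypothetical, heavily trimmed .bim content for illustration only.
sample_bim = """
{
  "name": "SalesModel",
  "compatibilityLevel": 1200,
  "model": {
    "defaultMode": "directQuery",
    "tables": []
  }
}
"""

if __name__ == "__main__":
    print("DirectQuery model:", is_direct_query(sample_bim))
```

Because the check reads one property for the whole model, it reflects the all-or-nothing behavior described above.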

Below are some common scenarios (note not all possible combinations are actually depicted below, but enough to give you ideas).

In this first scenario, we have a traditional data warehouse which has integrated data from four different source systems. The data warehouse resides in an on-premises server, and the Analysis Services semantic layer resides in Azure. Data in Analysis Services is refreshed on a schedule. Reporting is primarily handled through the semantic layer to improve the user experience.

The data sources & Visual Studio pieces are removed from the rest of the examples to simplify (though they certainly still pertain).

The following depicts using Azure AS in DirectQuery mode back to the data warehouse. In this case, the DAX or MDX (whichever is passed from the client tool) is converted to SQL and sent to the data warehouse through the gateway. Data is then retrieved and sent back securely to the client tool.

Alternatively, the DirectQuery mode could be directed at a cloud-based data warehouse. Note in this scenario that a gateway is not necessary (because AS and the data warehouse both reside in Azure).

The next scenario varies only in that the data is stored in Analysis Services, and refreshed on a schedule.
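The scenarios so far differ mainly in which hops a user query passes through. Here is a small Python sketch of that logic; the function name `query_path` and the string labels are hypothetical, and the sketch covers user queries only (a scheduled refresh of a cached model follows its own path).

```python
from typing import List


def query_path(storage: str, source_location: str) -> List[str]:
    """List the hops a user query passes through (illustrative only)."""
    hops = ["client tool", "Azure Analysis Services"]
    if storage == "directquery":
        # DAX or MDX is converted to SQL and passed on to the source.
        if source_location == "on-premises":
            # A gateway is only needed to reach an on-premises source.
            hops.append("gateway")
        hops.append("data warehouse")
    # In cached mode the query is answered from the in-memory model,
    # so no further hops are added.
    return hops


if __name__ == "__main__":
    for storage, location in [
        ("directquery", "on-premises"),
        ("directquery", "azure"),
        ("cached", "azure"),
    ]:
        print(storage, location, "->", " > ".join(query_path(storage, location)))
```

The shorter the path, the less there is to tune, which is one reason the cached option tends to give the best query performance.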

Lastly, we have the concept of federated queries. PolyBase allows us to define an "external table" in SQL Server or Azure SQL Data Warehouse and reach into data stored in Azure Blob Storage (Azure Data Lake Store support is coming soon). These external tables are known as "schema on read" because the data isn't physically stored in the data warehouse.

In the following example, we are using Analysis Services in DirectQuery mode directed to the data warehouse. Under the covers, since an external table is involved, the user queries will actually reach back farther to get the Equipment Health Data as well. The users don't have to know that's actually happening, though they do need to be willing to accept slower performance. Federated queries like this offer great flexibility to avoid or delay data integration for data analysis which is infrequent (if the data is frequently accessed, or has progressed beyond proof of concept, you likely want to implement full data integration).

Though I didn't depict every possible combination, hopefully this gives you a good idea of ways to use Analysis Services, and where it can fit into your existing architecture. As you can tell from Part 1 of this series, I'm a big fan of using a semantic layer for a consistent and friendly end-user experience.

You Might Also Like...

Overview of SQL Server Analysis Services Tabular in DirectQuery Mode for SQL Server 2016